
A Long Festschrift: To Pass the Torch Which Way?

Of Cheese and Cheese and Chalk, and Sealing Wax, and Psychology, IT and Kings.

Dr. Dan Diaper

Introduction to Dan Diaper

I have known Dan Diaper, on and (more recently) off, for 37 years. With any luck, I hope we will both be alive to celebrate our 40th (so to speak). Late 70s, at the MRC APU, Cambridge. Early 80s, working together at the EU/UCL. Late 80s, collaborating on a Research Council funded project (with Peter Johnson). And we both survived to tell the tale – well, perhaps two rather different tales.

Dan needs no introduction from me. His place in the HCI Discipline Pantheon (my capitals) is secured by his work, including: TAKD (Task Analysis for Knowledge Descriptions); SAM (Systemic Analysis Method); TOM (Task Orientated Modelling); TA (Task Analysis) for HCI etc. His place in the HCI community is secured by his very presence (once met, never forgotten) and his commitment to his research (relentless and unremitting). There are times when Dan makes even a drill look as if it’s not trying hard enough.

As well as not needing an introduction from me, there is a further reason for my providing only a brief introduction. Dan’s Festschrift submission paper in fact does the job for me – himself introducing himself. Indeed, he even introduces me as well (OK it’s my Festschrift). Buy one get one free? I let the reader judge.

DDD SYSTEMS, 26 St. Marks Road,

Bournemouth, Dorset, BH11 8SZ, U.K.

ddiaper@ntlworld.com http://www.dddsystems.co.uk/

Tel. +44 (0)1202 523172 Mob. +44 (0)7840 539957

Introduction to the Paper

Although Dan himself needs little, if any, introduction from me, his paper does.

First, I encouraged him to submit a paper in the following words: ‘… your assertion that I had influences…. on your work intrigues me. Obviously, we influenced each other, so I am not claiming that I had no influence on your research/thinking. But what exactly and how did it come about – both technically and personally? What’s interesting is that you are in a very unique position to partition my ‘influences’ between pre- and post-conception. You were in the Ergonomics Unit before the Conception beast got going. You also knew me in Cambridge and my applied psychology beginnings with Donald Broadbent…… This would be an unusual type of contribution; but very much in the spirit of a Festschrift (see definition attached).’

Second, I read a draft of the paper and suggested Dan add a reference to MUSE (Method for Usability Engineering; Lim and Long, 1984) as concerns HCI design methods and to EU work on HCI Engineering Design Principles (Cummaford). References to both were subsequently added to the draft.

Third, I was privy to the reviews of the paper (in strictest confidence), after its rejection for the Festschrift. I would like to make clear that I had no quarrel with the reviewers’ recommendations, nor with the editor’s decision not to accept the paper. I regretted its rejection, of course, and wrote to Dan as follows: ‘Dan Hi! Sorry to hear this (… rejection of his Festschrift submission…) but we knew it was a risk. …. The real problem is that the type of paper that it espouses to be is not well specified, nor is it easy to specify such an unusual paper. So it is easy to shoot down as not being one thing or the other. Had the editors run with the underspecification e.g. to liven up the FS, you might have got away with it.’

Fourth, I came across the paper recently, while archiving. Having re-read it, I thought it would sit well with other EU papers submitted; but not accepted by the Festschrift. It discusses the EU work knowledgeably and seriously. It also raises some important and interesting issues. Dan is also not shy to characterise our persona and the resulting relationship and interactions. The result is very funny. I suggested its posting here and Dan accepted.

Abstract

Professor John Long has influenced the author’s research over more than thirty

years. Task Analysis for Knowledge Descriptions (TAKD), for example, directly

arose from their work, with Peter Johnson, in the early 1980s at the Ergonomics and

HCI Unit (EU) at University College London. Many aspects of the Conception of the

General Design Problem developed at the EU have driven the author’s research,

most recently in general systems analysis and culminating in the Systemic Analysis

Method (SAM); seven criteria are listed where the SAM is better than the, now

retired, TAKD method. Some aspects of the Conception are questioned, however,

notably the nature of design principles and, in some design contexts, how desired

performance can be set so as to be a driver of design, rather than having only an

evaluative function.

Comment 1

The issues of Design Principles and Desired Performance are addressed later in the paper.

Notwithstanding such caveats, the influence of Professor John

Long on the research history reported has been both large and profound.

Keywords: Cognitive Engineering, Human-Computer Interaction, Design, General Systems

Analysis, Task Analysis, Conception of the General Design Problem.

Introduction

In an email, Professor John Long wrote to the author in early 2009, “… your assertion that I had

influences … on your work intrigues me. Obviously, we influenced one another, so I am not

claiming that I had no influence on your research/thinking. But what exactly and how did it come

about – both technically and personally? What’s interesting is that you are in a very unique

position to partition my ‘influences’ between pre- and post-conception. You were in the

Ergonomics Unit before the Conception beast got going. You also knew me in Cambridge and my

applied psychology beginnings with Donald Broadbent … This would be an unusual type of

contribution; but very much in the spirit of a Festschrift (see definition attached)”.

From John’s Wikipedia attachment, “In academia, a Festschrift is a book honoring a respected

academic and presented during his or her lifetime. The term, borrowed from German, could be

translated as celebration publication or celebratory (piece of) writing.”

Cambridge

Around the late 1970s, Cambridge was home to a couple of hundred psychologists, a galaxy of

stars, including people who had written papers that had impressed me as an undergraduate,

particularly in my own area of devotion, cognitive psychology. The shrine in this field, however,

was not the University’s Department of Experimental Psychology where I was studying for my

doctorate, but the Medical Research Council’s Applied Psychology Unit (APU) in Chaucer Road,

whose Director, before my time, Donald Broadbent, was John’s doctoral supervisor. The APU

was my intellectual home, actually a Victorian mansion by today’s standards (and a lot of

caravans out back), and I spent much time there as I lived in a house in Chaucer Road for years.

I remember Dr. John Long at the APU, in seminars, at numerous parties, and once, as I descended

the APU’s main staircase, John was at the bottom,

“Dan! How are you?”

“I’m confused.”

“Ahh, but you have that rich, deep confusion that we should all strive for.”

John had, unwittingly, given me one of the best compliments of my life; there have been few

better since. Unlike most of our colleagues, John and I understood, without need for discussion,

that a contrived confusion was a necessity for getting to grips with the truly complex, and

scientific psychology was, and is, truly, truly complex. That complexity was one source of our

passion for psychology, but then John and I are much alike in many ways, more as two cheeses

than in the adage, “as different as chalk and cheese”. John, and I, alone are likely to question that

saying, “Why chalk and cheese?”, “They’ve a lot of similarities, colour, high in calcium, …”, but

together we explosively explore whatever topic is to hand. Our joy, but I’ve noticed often the

glazed eyes of those within earshot.

John left Cambridge in 1979 to be the Director of the Ergonomics Unit (EU) at University

College London, which later, reflecting his research interests, became the Ergonomics and HCI

Unit. I continued at Cambridge until 1982 when I submitted my dissertation. I was comfortable

in Cambridge, earning a reasonable living supervising undergraduates for half a dozen colleges,

but John Long was one of the brightest people I’d ever met in Cambridge, so, comfortable or not,

when a Research Assistant (RA) post was advertised at the EU I applied; there were only a couple

of other people in the world who I wanted to work with.

The Ergonomics and HCI Unit

With his utter, open honesty, another cheese and cheese trait John and I share, John was explicitly

doubtful that I could make the necessary change from theoretical, scientific psychology to applied

psychology as practised at the EU. Even so, I was sufficiently convincing, only just, I think, that

John took me on.

My first EU project with John was on Peter Johnson’s IT syllabus design project, funded by the

U.K. Government’s Manpower Services Commission. Out of this project, within months of my

starting, came the first version of Task Analysis for Knowledge Descriptions (TAKD).

I was having dinner in a restaurant with John around 2005 when I was surprised to find that he

had forgotten my analysis, that I have also explained to others in a range of contexts, of how we

developed working together in the early 1980s. The rules of the intellectual game were simple,

we would continue to discuss some topic at a high level (i.e. in general, abstract terms) until one

of us challenged a high level proposition in the argument chain. At this point the proposer would

have to immediately unpack the proposition at progressively lower levels of detail until the

proposition was either accepted by the challenger, or both of us agreed to reject or modify the

challenged high level proposal, which we then proceeded to do, before returning to our high level

of discourse.

Comment 2

Readers wishing to join in the fun might start with the proposition: ‘Worksystem performance does not require reference to its domain of application’ and then follow the two threads of ‘Usability’ and ‘Task Quality’, as the lower level of description. Readers who think that the decompositional strategy offers more to operationalisation than to conceptualisation (as a preliminary to test) may have a point.

It was very efficient, but relied on a great deal of intellectual trust between us; we

spoke in a shorthand, and it was often confusing for outsiders. It’s not that this sort of shorthand

between co-researchers is uncommon in the specialist area in which they work, but John and I

applied it to everything, from the pros and cons of his Moto Guzzi motorcycle, to chalk and

cheese, and, if it had cropped up, to the value of sealing wax as a communication security system

(one of us would have asked whether the other meant verification or validation, I’m sure).

Happy days, but the seeds of our intellectual divergence were sown. After my Cambridge years I

was already more comfortable with computers and programming than John. After my broad

self-education in IT in my first EU project, my next one at the EU, funded by the U.K. Government’s

Science & Engineering Research Council, was on AI, on Expert/Knowledge Based Systems, in

particular, on natural language processing intelligent user interfaces.

Peter Johnson made the initial move towards transferring TAKD from a syllabus design tool to

more general HCI and UID applications (Johnson, 1985; Johnson et al, 1985), but by the mid-

1980s HCI was a major concern for most of the maximum of a dozen or so researchers in the EU

(John’s view was that if there were more than a dozen, then he wouldn’t be able to be sufficiently

involved in all the EU’s research; many years later in a similar situation I agreed with him, but

typically, more extremely than John; he’s always been the more mature cheese, and this has

nothing to do with age).

John prefers to think of himself as a cognitive engineer, his concern primarily being with people,

work and performance, in systems, which isn’t to deny his considerable knowledge and expertise

in other fields, including IT. I, however, was in ready agreement with Russel Winder who I’d

met soon after we became committee members of the then newly formed British Computer

Society’s HCI Special Interest Group (now the British HCI Group). As a computer scientist,

Russel’s view was that HCI folk didn’t build computer systems, computing people like software

engineers did that. Even before I left the EU in 1986, I was already turning to software

engineering (Diaper, 1987), and although I never stopped being, and developing as, a

psychologist, I stopped teaching psychology in 1989 and moved to a fairly traditional Department

of Computer Science at Liverpool University (“traditional” in the sense that computer science is a

branch of, usually discrete, applied mathematics and Liverpool’s Department was part of its

Faculty of Mathematics).

Before I left the EU, John, with John Dowell, first his master’s and then his doctoral student, was

developing their Conception (Long and Dowell, 1989), which is still best expressed,

according to John, in their Ergonomics paper (Dowell and Long, 1989).

Comment 3

To be precise, Long and Dowell (1989) proposes a General Conception for the Discipline of HCI, which includes specific conceptions for a Craft, an Applied Science and an Engineering Discipline of HCI. It also includes a summary version of the General Design Problem for HCI.

Dowell and Long (1989) assumes the Discipline Conception of HCI (Long and Dowell, 1989) and proposes a complete Conception of the General Design Problem for HCI, a summary of which appears in Long and Dowell (1989).

Thus, the Discipline Conception is ‘best’ expressed in Long and Dowell (1989) and the General Design Problem for HCI is ‘best’ expressed in Dowell and Long (1989).

I was with John,

physically and intellectually, in the early stages of the Dowell and Long Conception’s

development. Chalk and cheese, however, John’s focus was more the human factors of HCI

whereas mine was becoming a broader HCI, subsequently (Diaper, 1989a), and oft repeated (e.g.

Diaper, 2002a), defined as “everything to do with people and computers” and which placed a

more equal focus on human and computer aspects.

Comment 4

There are two separate issues here – the scope of HCI (as relates to ‘everything’) and the ‘balance’ between human and computer in HCI.

Concerning the ‘scope’ of HCI, Long and Dowell delimit it both with respect to the Discipline and its General Design Problem. This, then, is a rejection of Diaper’s ‘everything’.

Concerning the balance within HCI, Dowell and Long (1998) clearly argue for such a balance, in their terms, between Cognitive and Software Engineering. Indeed, ‘Cognitive’ was selected, in part, to balance ‘Software’.

It is, of course, true, as claimed by Diaper, that at the time, Long and Dowell’s research focussed on Cognitive Engineering, while Diaper’s research was developing in the direction of Software Engineering.

I am indebted to John Dowell for pointing out

to me that my “everything” seems seriously at odds with the Dowell and Long Conception,

Comment 5

Indeed, Diaper’s ‘everything’ is at odds both with the Discipline and the General Design Problem Conceptions for HCI (see above).

which wanted to define the engineering discipline in advance,

Comment 6

The motivation of the Discipline Conception (Long and Dowell, 1989) was not to pre-define the final expression of HCI per se; but to provide different conceptions (Craft; Applied Science; and Engineering), which would support the development of HCI, of whatever kind. ‘In advance’ here, then, may be a bit misleading.

whereas my definition might imply some

ad hoc accretion and an acceptance that a unified, integrated discipline of HCI, cognitive

ergonomics, or whatever it is called, won’t be achieved.

Comment 7

‘Ad hoc accretion’ would not be inconsistent with a Craft Discipline of HCI. A ‘unified, integrated discipline of HCI’ would not be inconsistent with an Engineering Discipline of HCI, as described by Long and Dowell (1989).

Indeed, Green (1998) in a commentary

paper on Dowell and Long actually argues that, “The case for a single unified model has not been

made. Instead of seeking for a single unified model that encompasses all aspects of a worksystem,

one might propose a ‘network of limited theories’ (Green 1990), each responsible for its own

ontology and for successfully interfacing with its neighbours (See also Monk et al. 1993 for

similar views).”

Comment 8

This point has been addressed in the Comments above. However, Green prompts an interesting question: what would be the difference between ‘a single unified model … that encompasses all aspects of the worksystem’ and a successfully interfacing ‘network of limited theories’, each responsible for its own ontology? It would be of interest for the comparison to be expressed, for example, as concerns ‘Task Quality’ – how well a task is performed (Dowell and Long, 1989).

Both John Long and I, and we were far from alone in the 1980s, believed that what was needed

was an engineering discipline that would contribute to IT systems development for the betterment

of people, the users, owners and those affected by such systems. John has been, and remains

today, steadfast in his commitment to this sort of solution, the development of such an

engineering discipline, although he currently prefers the term cognitive engineering to human

factors, in which knowledge about how systems can be reliably designed would prescribe both

design processes and what was designed.

The Dowell and Long (1989) paper’s abstract is explicit, that the paper first “examines the

potential for human factors to formulate engineering principles. A basic requisite for realising

that potential is a conception of the general design problem addressed by human factors.”

Twenty years later, John continues to reflect on why the Conception of the General Design

Problem, he does prefer it capitalised,

Comment 9

Capitals are used to refer to an instance of anything, for example, the Long and Dowell (1989) Conception for a Discipline of HCI. However, there are many different ‘conceptions of the discipline of HCI’ and the latter description would not be capitalised.

failed to be adopted by others, other than primarily by his

EU colleagues and students (see this volume).

Comment 10

It is true that the Conceptions approach to HCI Engineering, as proposed by Long and Dowell (1989) and Dowell and Long (1989 and 1998), has never been the HCI flavour of the month, although it had its moments in terms of conferences and journal publications and still does. Citations and full text requests could be worse after 26 years, especially in a fast moving field like HCI. I do not disagree with Diaper here; but merely point out to him and others that, in my (albeit long-term) view, the chips are not yet down (in spite of much textbook and CHI flag waving on the matter). This is not the place for me to address the adoption issue, certainly in any detail. Diaper, however, makes a good enough job of it for the purposes in hand.

The simple answer, one much too simple, alone,

for John or me, is that it was all too complicated. John suspects that his Conception required a very,

perhaps too, radical change in perspective. I’m not so sure, suspecting, amongst a number of

factors, that the delivery of the Conception was weak because it was incomplete and lacked

convincing examples

Comment 11

The Conceptions, indeed, lacked convincing examples. I entirely concur. Hence, the importance I attached to Diaper referencing the Cummaford work (2007), in which we propose preliminary and putative HCI Engineering Principles to make good this deficiency as well as one way forward for their acquisition. An earlier, comparable proposal also appears in Stork (1999).

However, I consider the Conceptions to be complete for the purposes in hand, that is, to be operationalised and tested with a view to acquiring initial and putative Design Principles. The work of Stork and later of Cummaford provides support for my contention. However, the proof of the pudding remains in the eating, that is, whether such principles can be acquired, tested and generalised. I am unpersuaded by the claim of weakness here. Persuasion would need better substantiation, supported by appropriate argumentation and rationale.

and, as John has pointed out to me, his timescale for developing design

principles was as a very long term objective; too long for the software industries, I think.

Comment 12

Indeed. The Laws of Thermodynamics were apparently preceded by 100 years of locomotive boiler making.

The TOM Project

In the late 1980s and early 1990s, the two Johns, Long and Dowell, Peter Johnson and I, with people

from Logica and British Aerospace, came together on the U.K. Government’s Engineering and

Physical Sciences Research Council funded Task Orientated Modelling (TOM) project. The

TOM project was to research, in what were the early stages of proposals, the further

computerisation of Air Traffic Control (ATC), particularly, replacing paper with electronic flight

strips. John, Peter and I had different solutions to how to achieve project and discipline progress,

and we had different agenda and interests. John was committed to his human factors engineering

discipline (Long and Dowell, 1989), Peter was already in a department of computer science and I

was moving to one. Peter would build prototype systems using formal methods, and I was into an

attempt to address the “delivery to industry” problem (Diaper, 1989b). We carved the project

into three strands based, it should be stressed, on the Dowell and Long Conception although, in

honesty, these strands were then developed independently within the TOM project, but with

regular exchanges of both ideas and, later in the project, data. The Johns developed the

application domain model (TOMDOM); Peter the device model (TOMDEV); and I the user model

(TOMUSR), which would be based on applying TAKD to ATC personnel and for which we, my

RA Mark Addison and I, developed a software toolkit (LUTAKD), in an attempt to make the far

too complicated TAKD method much easier for others to apply (Diaper and Addison, 1991;

1992). I publicly abandoned TAKD, after starting its development at the EU in 1982, in Diaper

(2001a).

The TOMDOM was a model of the ATC domain of application, about aircraft, airspace, airports,

radar, communications, and so forth. The basic architecture was that the TOMUSR and the

TOMDEV, which models users and devices (flight strips in this project), respectively, would

combine to form the TOMIWS, the Interactive Work System. Work, the safe and expeditious

passage of aircraft, is achieved by this work system making changes to the application domain

and dealing with feedback from the domain.

Comment 13

An admirable summary of a complex; but well-conceptualised project. No comment (so to speak).

While the TOM project was successful in many ways, one significant failure was to provide John

with the sort of metrics that he wanted to measure work, and, especially, to provide measurements

of both desired performance (PD) and actual performance (PA). One aspect of design principles

that John has consistently stressed to me over the decades is that such principles must include

such performance metrics, i.e. they are a necessary condition of being Conception design

principles but, of course, this condition is only a small fraction of what else such principles must

or might contain. Being a necessary condition, however, I concentrate some effort below on what

I think are problems with PD and PA because, if these are problematic, then, being necessary, there

would appear to be difficulties with the Conception’s design principles and an analysis of this

might provide one example of a technically inspired insight into why Dowell and Long’s Conception

was not more widely adopted.

Comment 14

Other researchers have applied the Conceptions descriptively, for example, in the Festschrift, Wild and Salter (2010) to service and to economic market design respectively. However, I know of no attempt to use the Conceptions for the acquisition of HCI Engineering Design Principles, other than my work with Stork (1999) and then with Cummaford (2007). Accounts of the limited application of the Conceptions need to distinguish these two cases, as well as the application of acquired Principles, as the reasons are likely to be different.

During the TOM project we lost one of our commercial collaborators, who were going to use the

TOMDEV and TOMUSR, with the software tools Peter and I developed with our RAs, to create

TOMIWS models that would interact with the TOMDOM that our lost collaborators would also

create. This uncompleted strand to the TOM research programme might have produced the

metrics for PD and PA that John desired. I’m not, however, convinced that this would have

happened.

For the TOM project, because ATC is a safety critical system, then with paper flight strips both

PD and PA are high

Comment 15

Such claims need to express Performance both in terms of Task Quality (how well a task is performed, here air traffic management (ATM)) and Worksystem Costs (User and Computer workload, here the respective workloads required to manage the air traffic this well). Safety critical systems require Task Quality to be high; but User Costs to be acceptable for maintaining Task Quality this high. PD and PA are, thus, not uniformly ‘high’. Indeed, reducing User Costs to increase traffic expeditiousness, while maintaining traffic safety, would be expected to express a typical ATM Design Problem.

and the existing system is highly redundant so as to be virtually error free in the

application domain of real aircraft flying around safely, although PA with respect to

expeditiousness may be compromised, as demonstrated by Dowell (1998). What John Dowell

reports, long after the end of the TOM Project, is a generic ATC model that comprehensively

applied the Conception and which provides an extended demonstration of using it. The research

application involves a simplified ATC paper flight strip system simulation which, using ATC

professionals as subjects, does produce cost metrics for both PA and PD. While there is much to

commend it, particularly its “worksystem” model that I return to below, I continue to have some

concerns about both PD and PA. John Dowell recognises that there is a problem with specifying

PD and cleverly and plausibly argues that it can be set as for a single aircraft’s performance flying

through an otherwise empty airspace. ATC interventions are thus zero, as are PD costs, except, I

think, for a monitoring function, i.e. that the airspace does only contain a single aircraft. He then

is able to calculate values for PA by analysing task performance in the simulations; PA costs for

expeditiousness, but not safety, increase as the number of aircraft in the airspace increases.

In Dowell (1998), however, such costs are expressed as “unit-less” values, whereas the senior

decision makers of ATC’s future will ultimately want quantification that they can understand,

probably money, or, at least, the improved ratio of ATC personnel to aircraft safely and

expeditiously controlled.

Comment 16

Senior, future ATM decision makers can choose to want anything, along with pilots, air traffic managers, airport managers, airline shareholders etc. Such wants, if expressed for the purpose of change, would normally be considered in terms of user/client requirements of different sorts. HCI Engineering Principles, as conceptualised by Dowell and Long, however, solve design problems. They do not satisfy (all or necessarily any particular) user/client requirements. This raises the issue of the relationship between design problems and user/client requirements.

One possible relation is that user requirements and design problems are one and the same thing. That is, there is no difference between them. This view, however, is rejected. Following the HCI Discipline and Design Problem Conceptions, a design problem occurs when Actual Performance, expressed as Task Quality and Worksystem Costs (User Costs and Computer Costs), does not equal (is usually less than; but not necessarily) Desired Performance, expressed in the same way. In contrast, user requirements have no such expression or constraints. User requirements may even conflict or otherwise be impossible to meet.

This difference indicates that user/client requirements and design problems are not one and the same concept. Rather, it suggests that design problems can be expressed as (actual or potential) user/client requirements; but not vice versa.
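The condition just described can be illustrated with a minimal sketch. The Python below is an assumption-laden illustration, not part of the Conceptions: the names `Performance`, `task_quality`, `user_costs`, `computer_costs` and `is_design_problem`, and all the numeric values, are hypothetical, and the unit-less numbers merely stand in for whatever metrics a real study would adopt. It encodes only the stated condition, that a design problem exists when Actual Performance does not equal Desired Performance, both expressed as Task Quality and Worksystem Costs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Performance:
    """Performance expressed as Task Quality plus Worksystem Costs.

    Hypothetical representation: unit-less numbers stand in for
    whatever metrics a real study would use.
    """
    task_quality: float    # how well the task is performed
    user_costs: float      # workload borne by the user
    computer_costs: float  # workload borne by the computer


def is_design_problem(actual: Performance, desired: Performance) -> bool:
    """Return True when actual performance does not equal desired performance."""
    # Dataclass equality compares all three fields, so any difference in
    # Task Quality or in either Worksystem Cost counts as a design problem.
    return actual != desired


# Illustrative values only (assumed, not taken from any study).
desired = Performance(task_quality=1.0, user_costs=0.4, computer_costs=0.2)
actual = Performance(task_quality=1.0, user_costs=0.7, computer_costs=0.2)

print(is_design_problem(actual, desired))   # True: user costs differ
print(is_design_problem(desired, desired))  # False: nothing to solve
```

A user/client requirement, by contrast, need not be expressible in this form at all, which is one way of seeing why the two concepts are not interchangeable.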

Of course, if anyone wants to increase the scope of Design Principles, they are welcome to try; but they need to demonstrate how the added scope can be integrated into the Conception for Design Principles, either those proposed by myself, Stork (1999) and Cummaford (2007) or others to be specified.

For a more complete description of the differences between user/client requirements and Design Problems – see this website, Section 3, Subsection 6 – Frequently Asked Questions.

I stress that it is only my later general systems research that leads me to

this sort of argument and I, and I think most others in HCI, have not seen our work in an

adequately large systems context. The 1998 paper does not deal with new designs, but only

presents data from the simulated current system, but clearly one could take a new design and

apply the theories and methods to evaluate it against the current system. The new one may be

demonstrably better, but the issue of whether it is significantly better isn’t answered, for example,

how many more aircraft could be controlled by ATC personnel and, of course, in the end, can the

financial costs of change be justified for the predicted benefits of change?

Comment 17

This point has been addressed earlier in Comment 16.

A related point was

made in Hockey and Westerman’s (1998) commentary paper on Dowell and Long, where, while

lauding the “explicit involvement of costs as part of the performance/usability metric” and that

these should provide “a more effective basis for the comparison of alternative systems”, they

warn that “it will not be possible to say how much increase in performance quality is equivalent

to how much more required effort or time, or how much more long-term effects of ‘fatigue, stress

and frustration’.”

Comment 18

The case has to be made here for ‘equivalence’, including the rationale and whether it is part of user/client requirements or the design problem or indeed both. In the Conceptions, Desired Task Quality and Worksystem Costs (User and Computer) are assessed against Acceptable Actual Task Quality and Worksystem Costs (User and Computer). It would be useful to indicate how this point is ‘related’ and to what more precisely.

My view on quantifying PA for an extant system, provided one uses relative metrics, e.g. unit-less

ones, is that it is not a problem for nearly all task analytic sorts of approaches, provided, also,

they are intelligently applied. N.B. “extant” may include simulations of new designs, provided

that simulation fidelity is adequate for what is being investigated (e.g. Diaper, 1990). There is a

potential problem, however, that different methods will sometimes produce estimates of PA that

are so different that different conclusions are reached. Out in the messy world of software

development I doubt this is a problem, but it may be so for academic theoreticians concerned with

the properties of methods, metrics, philosophies and associated exotica, which certainly includes

the Dowell and Long Conception and, I’m afraid, much of my own theoretical research.

Comment 19

The problem of measurement reliability has been with us for a very long time and is likely to remain with us for even longer. Diaper’s scenario, however interesting, in itself or with respect to problems of measurement, does not strike me as a good starting point for the acquisition of HCI Engineering Design Principles. See also Comment concerning hard and soft design problems.

On the other hand, I think there are many problems with the quantification of PD. It may seem an

exotic point, but I cannot see how it is possible to set a PD that is independent of a radically new

design. For example, the PDs for an automated flight strip system and a fully automated AI ATC

system that had no human controllers, and all their associated capital and infrastructure costs,

must be radically different. While paper and electronic flight strip systems might have identical

PDs, this would only seem so provided that neither the application domain nor the boundary of the

work system (see below) are changed, for example, if electronic flight strips are simply slotted

into an otherwise unchanged system. If a more radical design changes the application domain,

say, changing air corridor properties such as minimum vertical and horizontal separation, then

there must be, at least, minor changes to PD to reflect this. A people-less, AI ATC system might

change both work system and application domain far more, for example, there may no longer be

any handover processes as currently occur between human controllers in different sectors, the AI

system staying with each of its aircraft from departure loading to arrivals unloading. In such a

case PDs related to in-flight handovers would simply be irrelevant, whereas they are crucial with

current systems. I can easily imagine future scenarios of distributed, interacting AIs where what

is now ATC is something so completely different that today’s ATC officers would not be able to

even recognise it. It might be unimaginative to suggest that whatever the technology, in the end,

aircraft have to be controlled and so future ATC systems would still be comprehensible with

today’s knowledge. I retort: what happens if, instead of controlling aircraft, what is controlled

are the status designations of dynamically changing, perhaps quite small, volumes of airspace,

which gives each aircraft’s autonomous AI constantly and rapidly updated flight path options?

Such ATC by airspace control is beyond human capabilities in realistically loaded ATC systems,

but there are some tasks that computers are better at than people, one example being volume (pun

intended) micromanagement.

Comment 20

Why not relative measurement, as for PA? However, the more radical a design, the less appropriate Design Principles are likely to have been acquired, the less available for application and so the less likely to be able to make a contribution to the radical design. Use of the Conceptions for descriptive purposes, however, would not be excluded in the latter cases.

One concern I have is where measured PA exceeds PD.

Comment 21

If measured PA of an existing Worksystem exceeds PD, then, following the Conceptions, the design problem would be to reduce PA to PD. This might be thought an unusual case; but presents no difficulty for the Conceptions. An example might be electronic customer check-outs in supermarkets, which are replacing people-operated check-outs. Electronic check-outs are known to be slower; more error-prone; less secure; and with less workload for customers. Supermarkets are well aware that customer service has been degraded by electronic check-outs; but are willing to trade this for a reduction in check-out staff.

For example, it was recognised decades

ago (e.g. Sell, 1985) that a diagnostic medical expert system could perform better than any

individual human doctor, because it was built from the expertise of a number of human experts.

Initially, PD can only be set by human expert performance in the absence of an implemented

expert system.

Comment 22

PD could be set in many different ways, including relatively, for example, greater than current Worksystem PA.

Once implemented and its performance evaluated, PA now exceeds PD, even on

the measures specified in the initial PD, but until it is implemented, we cannot know on which

parameters and by how much PA is greater than PD. Subsequently setting PD to PA is the wrong

way round, PD is simply playing catch up if the design is iterated with further incremental

improvements in the expert system’s performance. In such a situation, PD cannot do the job

intended of it within the Conception.

Comment 23

A Design Problem can be expressed as a performance deficit or as a performance excess. In both cases, performance is not as desired. Design Principles could be (in principle) acquired for both cases, although design concerns are more likely to be associated with the former. See also Comment 21.

Furthermore, where PA is high, a thorough investigation of

performance is likely to add additional parameters to PA, which in

consequence would add qualitatively to PD, as well as the quantitative changes described above.

In summary, when not just analysing a current system, then the problem I have with setting PD is

that it will sometimes be dependent on a particular design. Therefore, in such cases, PD cannot

drive design, but can only follow design, having an evaluative rather than a design generative

function.

Comment 24

This is indeed a complex issue. However, it must be remembered that, according to the Conceptions, PA/PD differences enable the diagnosis of a Design Problem. Currently, Design Knowledge of whatever sort (for example, Guidelines, Models, Design Practices and maybe even, in the distant future, Principles) drives the search for, that is, to prescribe, a Design Solution. ‘Drive design’ needs to be clarified with respect to these differences between Design Diagnosis and Design Prescription.

In the Conception it is necessary that a design principle always includes desired and actual

performance costs, yet there do appear to be problems with specifying and quantifying both PA

and PD if they are to contribute to the Conception’s desideratum that design principles can generate,

as opposed to evaluate, designs.

Comment 25

Principles provide classes of Design Solution to the classes of Design Problem, constituting their scope. They do not satisfy user/client requirements, which are either not design problems or are outside their scope. They do not ‘generate designs’ in general. They do provide Class Design Solutions to Class Design Problems. Such Design Solutions constitute part of the design of a new or a re-designed Worksystem. Perhaps an example will help here:

The work of Cummaford (2007 and 2010) attempts to acquire a Design Principle, which has as its scope: ‘support for the exchange of goods for currency between some customer and some vendor’. This exchange is conceptualised and operationalised in terms of: negotiation; agreement; and exchange. The latter constitute the intended scope of the Principle to be acquired. Excluded from the scope are: product selection; product comparison; and post purchase interaction, for example, the return of goods. The latter are excluded on the grounds that they require information gathering and decision-making. These aspects of the design, then, require ‘best design practice’ or Diaper’s ‘imaginative, creative design processes as human psychological functions’.

For me this is a core issue, whether one can create designs using

principles or whether imaginative, creative design processes remain as human psychological

functions which are, better than now I hope, supported by principles, and methods, tools, etc., that

help evaluate putative designs.

Comment 26

The Conceptions do, indeed, claim that ‘one can create designs using Principles’; but only those parts of a complete design that constitute Design Solutions to Design Problems within the scope of the appropriate Design Principle. This would support Diaper’s ‘imaginative, creative design processes as human psychological functions’. This support would have better effectiveness (that is, be more likely to achieve a design having the Desired Performance) than other types of current Design Knowledge, such as Guidelines, User Models, Design Practices etc.

The difference between ‘hard’ Design Problems and ‘soft’ Design Problems is also relevant here (see Dowell and Long (1989) – Figure 2). Hard problems are those which can be completely specified (for the purposes in hand, that is, of design). Soft problems cannot be so specified. Only hard problems, then, can be the subject and object of Design Principles, as advocated by the Conceptions. Only Design Principles for hard problems can support design practices of specify then implement. Design Principles cannot be formulated for, and so cannot be applied to, soft problems. However, design practices which do not make the distinction between hard and soft problems, such as trial and error, specify, implement, then test etc, using Models etc, can obviously be applied to both hard and soft problems, although with no ‘guarantee’ of solving either. It seems that there will always be plenty of work to do for Diaper’s ‘imaginative, creative design processes as human psychological functions’.

The Conception beyond TOM

John and I never again worked together on a major project after 1992 and, being very busy people,

failed to keep in regular contact. We’d bump into each other every few years at conferences and,

neither of us ever growing up, we’d pretty much start where we left off. John has chided me,

often when we’ve met, even unto sarcasm, for my failure to understand the Conception and, in

particular, what are its engineering design principles, which when applied would give a better

guarantee of performance than, for example, guidelines. I have admitted my repeated failure, on

the Conception and even more so on the principles, but I have made one more attempt, with

John’s help, for the purpose of writing this paper.

Comment 27

This is, of course, much appreciated and constitutes the basis of my attempted clarifications by way of these comments.

I re-read the Dowell and Long (1989) Ergonomics paper. I still didn’t understand it, in some

respects. My difficulty is in understanding the key concept, that of the General Design Problem.

Comment 28

Put simply, the General HCI Design Problem is what HCI Design Knowledge (such as Principles) is intended to solve, so prescribing an HCI Design Solution. The Conceptions are intended to support the acquisition of Design Principles, which can be operationalised, tested and generalised against an expression of the Design Problem (as indeed can any alternative type of HCI Design Knowledge (Guidelines, Models, Design Practices etc)). Discipline agreement on the expression of the General HCI Design Problem would enable testing of these different types of HCI design knowledge against the same problem, so facilitating evaluation of their relative effectiveness and so discipline progress. Of course, alternative Conceptions to those of Long and Dowell could serve the same purpose.

I even wonder, still, whether it is about design. I want to use “design” as in a software

engineering lifecycle model sense, where I suspect that John’s sense is a more general one, of

everything to do with building something, or in his terms, ‘specifying then implementing’ and in

contrast to the activities of science or craft (Long, 1986).

Comment 29

The contrast is, indeed, with (applied) science and craft. However, ‘Specify then Implement’ describes the Design Practice associated with the application of Design Principles in the manner proposed in the Conceptions. ‘Specify then Implement’ can be contrasted with alternative design practices, such as ‘trial and error’, ‘implement and test’ or ‘specify, implement, test and iterate’, associated with other types of Design Knowledge, for example, Guidelines, Models, Design Practices etc. The relationship between Design Problem and Design Solution in the case of Principles does not require (empirical) testing because it has already been conceptualised, operationalised, tested and generalised in the course of its acquisition. The same can obviously not be said for other types of Design Knowledge, for example, Nielsen’s Guidelines (2010), which are recommended by most HCI textbooks and are claimed to be used by practitioners. The issue of ‘everything’ in contrast to ‘scope’ is addressed in Comment 4.

John does admit that the Conception

has nothing to say about creativity, where designs come from,

Comment 30

The Conceptions have a great deal to say about the Design Solutions provided by Design Principles and their associated Design Practice – see Comment 29. However, they have nothing particular to say about creativity in general, however it may be understood by Diaper (or others).

yet for decades my design problem

has been Diaper’s Gulf (e.g. 1989c), between requirements specification and design.

Comment 31

More precisely, user/client requirements are not the same as Design Problems, which form the starting point of the acquisition of Design Principles, which express the Design Knowledge proposed by the Conceptions.

Noting that

requirement specifications are not the same as the Conception’s design principles,

Comment 32

See Comment 31.

I do not see

how a requirements specification can generate a design, except by exclusion, or, sometimes

unfortunately, by copying previous designs.

Comment 33

The application of HCI Engineering Design Principles could also be thought of as ‘copying’ in the sense used here. Since the Principles offer the best guarantee of solving Design Problems, as identified in User requirements, I would consider this (very) fortunate. See also Comment 26 for address of the general issue of design generation.

In a software engineering project a perfectly reasonable requirements specification might be that

the software must conform to Microsoft’s “Windows XP style”, so, no matter that it is a poor

piece of modern user interface design, we would expect the print option to be under the file menu,

where it is for historical reasons concerning the creation of a print file. This is design by copying,

but the requirement hasn’t generated the design, which was done decades ago,

Comment 34

It is important to distinguish here two uses of the term ‘design’:

1. The design of “Windows XP style” (which indeed was done decades ago) and

2. The design to which “Windows XP style” is recruited, which is currently being undertaken.

The same difference would hold (and so needs to be distinguished) for Design Principles. See also Comment 26.

and it certainly

doesn’t constrain the add-on solutions of having other ways to access print to overcome the old

design fault, that most users don’t understand why print is there, and many users probably don’t

realise it is there because they use other means to access print, such as via specialised, and

sometimes program specific, icons and buttons. Furthermore, I argue, that whether expressed

positively or negatively, or as declarative statements or as performance targets, requirement

specifications that do not copy old designs can only exclude some designs and cannot generate design.

My argument is most easily understood with the more modern performance requirements that are now

used in some international standards (ISO, DIN, etc.). Here, one might have a requirement that

users must receive a response within X seconds of their input. Clearly how this sort of

requirement is met by any particular design, quite deliberately, is not specified. So this sort of

requirement cannot generate design. With a requirement expressed as a declarative statement,

such as, ‘All pointing devices must highlight, select and show options’, then this excludes all

designs where this is not true, but it does not generate a design,

Comment 35

Here, I agree with Diaper. However, whether Design Principles could or could not include such requirements remains an open matter. It certainly cannot be excluded at this time. See also Comments 26, 33 and 34.

whether it’s left and right mouse

buttons and single or double clicking, or how a graphic tablet stylus works. Furthermore, if I

were interested in interface consistency, I can imagine (design) a mouse with a single button that

mimics a stylus, so, for example, holding down the button would provide menu options rather

than making a selection, or vice versa, a stylus that had a button to mimic the right button

selection functions of a Windows-based PC’s mouse. My point is that such designs were not

generated by the declaratively stated requirement, but only that they are constrained by it and

could be evaluated with respect to it.

Comment 36

For the address of generation and constraints with respect to Design Principles – see Comments 26, 33 and 34.
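
The claim that a requirement can exclude designs without generating any can be illustrated with a minimal sketch. Everything named here is hypothetical: the `Design` record, its fields, the candidate devices and the two-second limit standing in for X are assumptions made for illustration, not taken from any standard or from the paper. Each requirement is modelled as a predicate over candidate designs that must already exist.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Design:
    """A hypothetical candidate design, described only by what the requirements test."""
    name: str
    response_seconds: float  # assumed worst-case response time to user input
    highlights: bool
    selects: bool
    shows_options: bool


def responds_within(limit_seconds):
    """Performance requirement: a response within `limit_seconds` of the input."""
    return lambda d: d.response_seconds <= limit_seconds


def pointing_device_requirement(d):
    """Declarative requirement: the device highlights, selects and shows options."""
    return d.highlights and d.selects and d.shows_options


def admissible(candidates, requirements):
    """Keep the candidates that satisfy every requirement; nothing new is created."""
    return [d for d in candidates if all(req(d) for req in requirements)]


# Candidates have to come from somewhere else (a designer, or copying old designs).
candidates = [
    Design("two-button mouse", 0.5, True, True, True),
    Design("one-button mouse mimicking a stylus", 0.5, True, True, True),
    Design("stylus without option display", 0.5, True, True, False),
    Design("slow two-button mouse", 5.0, True, True, True),
]

requirements = [responds_within(2.0), pointing_device_requirement]  # 2.0 is an assumed X
survivors = [d.name for d in admissible(candidates, requirements)]
print(survivors)
```

Both mouse designs survive, so on this sketch the requirements constrain and evaluate the candidates but do not choose between them. Calling `admissible([], requirements)` returns an empty list: with no candidates supplied, the requirements produce nothing.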

John’s response to aspects of the above is in two parts. First, that in areas like civil engineering

it is claimed that there are structural engineering design principles that are generative as well

as being evaluative. Second, and I agreed with him, my sort of software engineering requirement

specifications weren’t the same as his design principles, whether general or specific ones.

On the first of these, I am suspicious of the generative claim, I don’t really trust civil engineers as

applied cognitive psychologists, and the distinction is subtle between how designs are actually

produced, i.e. generation, which I think is about the psychology of designers, and the constraints

under which designs are produced, either by copying previous designs or explicitly excluding

some design options. I have come to agree with much of what people such as Prof. Gilbert

Cockton say about design, and, up to a point, designers (e.g. Diaper and Lindgaard, 2008;

Cockton, 2008). Furthermore, I have argued that we are missing, or there are very few of them,

experts about methods (Diaper and Stanton, 2004). These experts would not be specific method

developers, there are lots, even far too many, of those, but experts about the properties of methods

and all sorts of stuff about their application. As a humble example, I have for a long time been

undertaking taxonomic research distinguishing models that are strictly hierarchical from those that

are heterarchical; the latter have levels of abstraction but, in the mathematical sense, are not true

hierarchies because either nodes are repeated or they have more than one parent node (e.g. Diaper

2001b). I have applied this work widely, to the strict hierarchical decomposition required when

using Data Flow Diagrams or in Checkland’s (1972; 1978; 1981; Patching 1990) Soft Systems

Methodology (Diaper, 2000), to categorisation tasks, from card sorting methods to applications in

AI, and for text and dialogue analysis and to much of my work on task analysis and, later, my

general systems analysis research.
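
The distinction between strictly hierarchical and heterarchical models can be made concrete with a small sketch. It assumes a model is given as a mapping from each node to the list of its parent nodes; the function name `classify_model` and the example task names are hypothetical and are not taken from the cited work. A node that would be drawn repeatedly under several parents is represented once, with several parents, so both conditions mentioned above reduce to the same test.

```python
def classify_model(parents):
    """Classify a levels-of-abstraction model.

    `parents` maps each node name to the list of its parent node names
    (an empty list marks a top-level node). The check looks only at the
    number of distinct parents; it does not test for cycles or for
    parents missing from the mapping.
    """
    multi_parent = sorted(
        node for node, ps in parents.items() if len(set(ps)) > 1
    )
    kind = "heterarchical" if multi_parent else "strictly hierarchical"
    return kind, multi_parent


# Hypothetical task models, for illustration only.
strict_model = {
    "make tea": [],
    "boil water": ["make tea"],
    "fill kettle": ["boil water"],
}
shared_model = {
    "make tea": [],
    "make coffee": [],
    "boil water": ["make tea", "make coffee"],  # shared by two parent tasks
}

print(classify_model(strict_model))
print(classify_model(shared_model))
```

In the second model the levels of abstraction are still present, but "boil water" has two parents, so the structure is not a true hierarchy in the mathematical sense.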

John has, of course, been involved in method development himself, for example MUSE (Method

for Usability Engineering; Lim and Long, 1994), but this work never convinced me that John had

really come to grips with the general problems with method design. No matter how worthy, on

whatever criteria, MUSE might have been itself, including integration with then current software

engineering practices, its adoption was only modest.

Comment 37

It would be nice to see the evidence for this statement. See also Comment 10.

I moved to software engineering because

my solution was to try to directly modify its practices, adding HCI, human factors, cognitive

engineering, etc. from within, as an alternative to working towards a separate human orientated

engineering discipline that would support software engineering and IT development. In any case,

if I don’t think John has successfully got to grips with such method development issues, and I’m

not claiming I have either, only that I’ve done some research on some bits of the problems, then

I’m not willing to trust the claims of architects and civil engineers about requirement

specifications or design principles being able to generate, as opposed to evaluate, designs. In an

area of engineering with which I am familiar, software engineering, I cannot find examples where

requirements specifications are design generative, i.e. Diaper’s Gulf remains a problem. I’m not

sure that software engineering has design principles in anything close to the Conception’s sense.

John’s second point, that my sort of software engineering requirement specifications weren’t the

same as his design principles, led him to suggest I look at the work of his more recent doctoral

students, who had “made a good fist” of investigating the acquisition of such principles. Dr.

Steve Cummaford kindly provided me with several hundred pages from his unpublished doctoral

dissertation (see: Cummaford and Long, 1998; 1999) in response to my request, via John, that I

needed a simple example of such a design principle that I could think about concretely.

Let me start by saying that if I had been Steve’s Ph.D. examiner, I would have had no problem

with passing him, easily, except that his acronyms are even worse than those John and I, cheese

and cheese again, have inflicted on our readers over the decades. Nor am I going to produce a

critique of his doctoral work, but I do have an extremely crude characterisation, a mere thumbnail

sketch, that I offer as a partial explanation of why I still don’t understand John’s design principles

in detail.

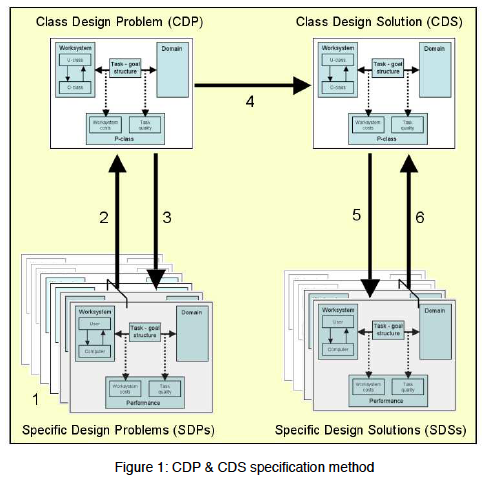

Figure 1 is from the dissertation’s Chapter 5: Method for the operationalisation of ‘class first’

(engineering design principle acquisition) strategy. When I looked at how this works (one

example is transaction processing from web pages, i.e. a customer buying products electronically),

then on the left side of Figure 1 one does some task analysis; one could choose from many

different methods, which would be more than adequate if intelligently applied. The task analysis

is carried out on a number of tasks, and performance data, objective and subjective, is collected.

The results are abstracted, and the specific and higher level class analyses can iterate. It’s the

same on the right of Figure 1, but this time it is design solutions that are the subject of the task

analyses and abstractions. What I don’t understand is the arrow labelled 4. Steve recruits one set

of design heuristics, which are not the Conception’s design principles, but one could choose many

other sources, and while this is all fine in the messy world of real software design, it does not

address my theoretical concern of how an abstraction based on a current system can generate a

new design solution.

Comment 38

Figure 1 is taken from Chapter 5 of Cummaford’s thesis. It shows the method by which the Class Design Problem and the Class Design Solution for Cycles 1 and 2 of the Design Principle were developed. It does not show the Principle itself.

The Principle itself is specified in Chapter 9: ‘The EDP (Engineering Design Principle) scope defines the boundary of applicability of the EDP. The scope comprises a class of users and a class of computers, which interact to achieve a class of domain transformations within a specified class of domains. The EDP is defined by generification of the commonalities between the Class Design Problem and the Class Design Solution. These commonalities comprised the following: domain model; Product Goal; User Model; and Computer Model…….

The EDP prescriptive design knowledge is now synthesised from the non-common aspects of the Class Design Solution and the Class Design Problem…..

See also Comments 26, 33 and 34.

Once the system has been designed, it can be evaluated, even a paper

prototype can be evaluated, but the roles are evaluative and not design generative.

Steve actually goes as far in his first chapter’s first paragraph as to state that his research “offers the

promise of reducing development costs incurred whilst solving problems for which solutions have

already been specified” (p31, my emphasis) and he admits in the final Chapter 13 (Strategy

assessment and discussion) that “the form of their (engineering design principles) expression

remains an issue to be addressed.” (p321), so my problems remain unaddressed as I still do not

have a simple, well expressed, exemplar design principle with which to work.

Comment 39

Methinks Diaper doth protest too much here:

1. Many of Diaper’s problems have indeed been addressed (see Comments 26, 33, 34 and 38).

2. The form of the initial, putative, design principles is well (enough) expressed for the purposes of the current (and future) research.

3. The EDP expression for the purpose of designer application, however, does remain to be completed (wire model expression is the current favourite, which we are working on).

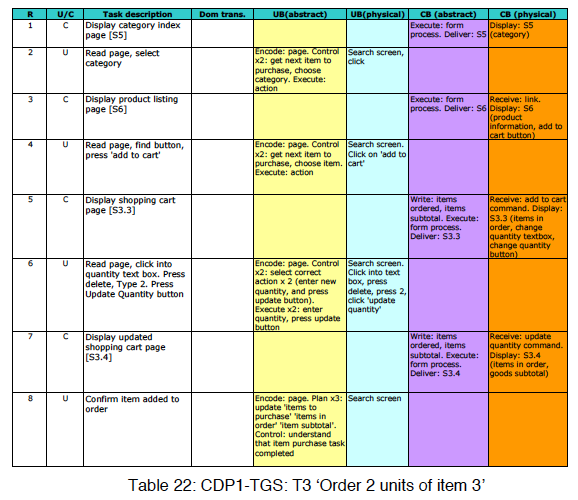

One issue with Steve’s task analyses is that they are basically a task dialogue model between user

and computer. This can be seen in the analyses where one of these agents acts, alternating from

one to the other. As can be seen in Table 22 from Steve’s Chapter 8 (p123), odd numbered task

lines concern the computer’s behaviour (CB) and the even numbered ones user behaviours (UB).

Table 22 column headings: R | U/C | Task description | Dom trans. | UB (abstract) | UB (physical) | CB (abstract) | CB (physical)

While such task dialogue models are extremely common, and I’ve frequently used them myself,

they present an especial problem in the context of the Conception, beyond the usual problems that

such models are sometimes poor at capturing parallel performance in agents. The problem is that

the Conception proposes that it is the interactive work system that performs work by transforming

the application domain and in Steve’s example the work system is the combined operation of user

and computer, whereas the analysis separates the two agents. Thus, the two agents’ performance

has to be subsequently combined to form an interactive work system model, but it would surely

be more appropriate to perform the task analysis at the interactive work system level in the first

place: it is conceptually neater; will usually provide different insights from a task dialogue level

of analysis; and will probably be more efficient.

Comment 40

This is an interesting suggestion and may have some advantages, as Diaper suggests, but for Task Quality only. Worksystem Costs need to be specified separately for User and Computer. The advantages are not clear for the latter case; indeed quite the reverse, as no distinction could be made.

In contrast, Dowell (1998) does present an interactive work system model at this level and not at

the level of its components,

Comment 41

Diaper is correct here. However, Dowell’s pioneering research was published in 1998 and Cummaford’s in 2007. The differences might properly be assigned to progress of the latter, enabled by the former.

although I might disagree with the claim made early in the paper

(p746) that “costs may be separately attributed to the agents of a worksystem”, as opposed, as I’d

prefer, to the work system itself.

Comment 42

See Comment 40. It is most definitely not a matter of preference for the Conceptions, however. User Costs and Computer Costs may figure differently in the expression of a Design Problem. For example, in this time of increasing computer power, one might expect reducing User Costs to continue to appear in many Design Problems; but to be accompanied by an acceptable increase in Computer Costs.

Setting aside this minor cavil, John Dowell uses a blackboard

model of his worksystem that does not separately model its components, so neither controllers nor

flight strips are visible in his worksystem level model. Blackboard models were commonly used,

particularly in Andy Whitefield’s work with John Long (e.g. Whitefield, 1989), when I was at the

EU in the early to mid 1980s, and although I’m not a particular fan of them myself, I think John

Dowell is absolutely spot-on to express his worksystem model without it containing things like

ATC officers and flight strips. My own, later approach is in one sense less sophisticated in that it

simply ignores what is within a particular work system boundary, treating the whole work system

as the agent that transforms the application domain.

Comment 43

See Comment 42.

John Dowell and I agree about the

importance of specifying a work system’s boundary, which is an analyst’s separation of it from its

application domain, although we have minor methodological differences, with John Dowell

suggesting that the boundary, at least in part, is determined by the application domain, whereas I tend to stress its definition by the “communicative relationships” that cross the boundary and

which, for me, are primary data for systems analysis at a particular level of work system.

A more major difference I recall discussing with the Johns on the TOM project was whether the

work system was a part of, a subset of, the application domain or, as in the Conception, separate

from it;

Comment 44

The Conceptions are very clear on this point. The Worksystem and its Domain of Application are indeed modelled separately and necessarily so. The reasons for this are two-fold.

First, Performance is expressed by Task Quality and Worksystem Costs (User Costs and Computer Costs – see Comments 40 and 42). Task Quality is derived from the model of the Domain, that is, how well the Domain transformation is carried out by the Worksystem. Worksystem Costs are derived from the model of the Worksystem, that is, the Costs of carrying out Domain transformations of this quality.

Second, Task Quality and Worksystem Costs appear separately in the Design Problem and indeed are likely to be competing as concerns the design itself.

Benyon (1998) suggests a third alternative, “to see the worksystem as including the

domain” (p153, his emphasis). My view was, and still is, that everything in a model of a system

is the application domain, except that part of the system that is within the work system boundary.

Comment 45

This claim needs to be supported by the sort of reasoning which appears in Comment 43. Without such a rationale it is not possible to assess this claim.

This position has led to my later systems approaches differing significantly from most of the

other John Long inspired work on this, as it allows simultaneous, multiple, overlapping work

system boundaries, whereas the other work tends to have only a single work system. The next

section elaborates on these issues.

Comment 46

As it stands, this claim is unclear. It will be addressed later, following its more detailed exposition below.
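
The position stated above, that the application domain is everything in a system model outside the work system boundary, and that several overlapping boundaries can be drawn at once, can be sketched with ordinary sets. This is an illustration under stated assumptions: the function name `application_domain` and the ATC element names are hypothetical, and the sketch represents only the view described in the text, not the Conceptions’ separate modelling of Worksystem and Domain.

```python
def application_domain(system, work_system):
    """Return everything in the system model outside the work system boundary."""
    system, work_system = frozenset(system), frozenset(work_system)
    if not work_system <= system:
        raise ValueError("the work system must lie within the system model")
    return system - work_system


# Hypothetical ATC system model; the element names are illustrative only.
system = {"controller", "flight strips", "radar", "pilot", "aircraft", "airspace"}

# Two analyst-chosen boundaries that overlap on "controller".
strip_work_system = {"controller", "flight strips"}
radar_work_system = {"controller", "radar"}

domain_for_strips = application_domain(system, strip_work_system)
domain_for_radar = application_domain(system, radar_work_system)

print(sorted(domain_for_strips))
print(sorted(domain_for_radar))
print(sorted(strip_work_system & radar_work_system))
```

Each boundary yields its own application domain from the same system model: "radar" belongs to the application domain of the first work system and to the inside of the second, while "controller" sits inside both.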

General Systems Analysis with Work Systems and Application Domains

TAKD’s requiem (Diaper, 2001a) cleared my research table on task analysis so that I could

invent something better. My first criterion for a better approach was to address the delivery

problem, in particular, how to make it usable for IT professionals of many types who were not

human factors experts. Boring from within, I had experimented with the Pentanalysis Technique

(Diaper et al., 1998), which extended the widely used Data Flow Diagrams (DFDs) software

engineering modelling technique so that a task analysis would have an output that mapped

directly to DFDs. It works, but I didn’t pursue developing the Pentanalysis Technique, I

published just the one paper on it, because I had other criteria to fulfil.

My second criterion was that I wanted to improve model validity, validity being defined as the

relationship between the assumed real world and a model of it (Diaper, 2004).

Comment 47: My preferred concept of validity is: conceptualisation; operationalisation; test; and generalisation of the model (or method) – see Long (1997). This preference also holds for the substantive and methodological components of an HCI Engineering Design Principle.

Not only would

the approach meet my philosophy of “pragmatic solipsism” (I can’t prove the world exists, but

I’ll assume it does for scientific and engineering purposes), but it must cope with an incertae

sedis world in which things do not fit neatly into an analyst’s hierarchical model, i.e. my new

approach would, where necessary, support a model of levels of abstraction that was, when

appropriate, heterarchical (Diaper, 2001b).

Comment 48: As an aside, Dowell and Long assume the need for the Domain model to include, where necessary, natural as well as (strictly) hierarchical components.

My third criterion was that as a computer scientist, for a number of reasons, I wanted the

capability to produce a formal model, i.e. in mathematics/logic.

Comment 49: Although models expressed in mathematics or logic may well be formal, and are considered such by Diaper and software engineers more generally, I consider formal also to include models whose form can be reliably expressed and agreed by any group of modellers. This is clearly a relativist view and out of step with that of Diaper and software engineers. Of course, much depends on the interpretation of the term ‘reliably’ here. My view is also shared by Salter (2010), for those readers interested in a reference.

I also knew that many people

find mathematics difficult or, for some, impossible. This seems hardly a controversial claim and

is sufficiently accepted that when we set up the journal Interacting with Computers in the late

1980s, we had a policy, which is still extant today in the journal’s advice to authors, that

mathematics, logic and other such stuff, when included in a paper, must also be “clearly described

in plain English.” So I developed Simplified Set Theory (SST) for the “mathematically

challenged”, who with it could do: logic using a graphical method based on Venn diagrams as

opposed to equation manipulation; procedural equation manipulation, following a method based

on subset identity and substitution, rather than using the full set of Set Theory’s algebraic

operators; and the automatic conversion of SST equations into, approximately, English. A

method of using SST was my Simplified Set Theory for Systems Modelling (SST4SM) approach

(Diaper, 2004). Another use of SST was to provide a formal modelling system for Checkland’s

Soft System Methodology’s Conceptual Models (Diaper, 2000) and with this, also for DFDs.

My fourth criterion for a better approach was that it should be a general systems analysis

approach, rather than specifically a task analysis one; I was by this time Professor of Systems

Science and Engineering, I chose my own title, and would always point out when meeting people

for the first time that it was systems, in general, and not only computer systems, that I studied.

Studying complex systems, I eventually returned to the basics of systems theories and almost its

simplest example of a heater with a thermostatic switch, say a bimetallic strip. This has led to a

logical AND gate model that is multimedia: the AND gate has both electrical and thermal inputs

and, importantly, the critical entities that form AND gates are the connections, electrical wires and

energy transfer functions, and not the objects such as heater, thermostat and so forth which are the

first focus of most HCI and software engineering approaches, including most of my own. This

theoretical research provides a basis for my more sophisticated work system and application

domain models, particularly with respect to studying “communicative relationships” across work

system boundaries.
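
The heater example can be sketched in code. This is only an illustration of the idea, not the published model: the names `Connection`, `and_gate` and `heater_on`, and the 20 °C set point, are assumptions introduced here. The sketch treats the connections (the electrical wire and the thermal transfer to the bimetallic strip) as the inputs to the AND gate, rather than the heater and thermostat objects.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Connection:
    """A connection between objects (hypothetical name, not from the source)."""
    medium: str   # e.g. "electrical" or "thermal"
    active: bool  # whether the connection currently carries a signal


def and_gate(*inputs: Connection) -> bool:
    """The gate is satisfied only if every input connection is active."""
    return all(c.active for c in inputs)


def heater_on(mains_live: bool, room_temp_c: float,
              set_point_c: float = 20.0) -> bool:
    # Electrical input: the wire supplying power to the heater.
    wire = Connection("electrical", mains_live)
    # Thermal input: heat transfer to the bimetallic strip. The strip stays
    # closed (input active) while the room is below the assumed set point.
    strip = Connection("thermal", room_temp_c < set_point_c)
    return and_gate(wire, strip)
```

With both inputs active the heater runs; removing either the electrical or the thermal input switches it off, which is the sense in which the gate spans more than one medium.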

My fifth criterion was that because psychology is so very, very difficult, even John and I struggle

with it after decades, then what was needed was a psychological approach that could be

understood, and, importantly, effectively and efficiently used by non-psychologists, say by

professional IT developers and their managers. My vehicle here was scenarios and starting with

Carroll’s (2000) proposals, I argued that scenarios were just imagined tasks, just task simulations

(Diaper, 2002b) and I subsequently developed the All Thought Is Scenario-based (ATIS)

hypothesis (Diaper, 2002c) which, in a nutshell, says that when we think, what we do is tell

ourselves stories (scenarios); this is how we perceive, recall/recreate memories, plan, etc. The

basic idea can be traced to the psychologist Jerome Bruner (1990) and has, for example, been

investigated in narrative approaches to task analysis (e.g. Rizzo et al., 2003).

My sixth criterion was to develop a means to predict the future, because design, whatever else it

involves, is inherently about predicting the future. This, of course, is impossible. In any case, I

don’t believe in the future, an old slogan of mine is, “The past is as mythical as the future.”, and I

only believe in the present world of now, which I designate as t0, as a pragmatic (in the William

James (1907) sense) assumption. What is possible, however, is that one can predict futures in the

plural (e.g. Diaper and Stanton, 2004; Diaper and Lindgaard, 2008). The supporting ideas come

from Chaos Theory, where future states are restricted but, at any time t0, it is impossible

to predict the state at t0+n. Chaos Theory allows micro-causality (strict determinism) but not

macro-causality. For example, a hinged pendulum will swing like an unhinged one most of the

time, but “can show a wide range of unpredictable, capricious movements” (Peterson, 1998, who

provides a non-mathematical introduction to Chaos Theory in chapter 7).
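
The combination of strict determinism with practical unpredictability can be illustrated numerically. The sketch below does not model the pendulum; it substitutes the logistic map, a standard textbook example of chaos, and the function name `logistic_trajectory`, the starting value 0.2 and the perturbation of 1e-10 are all assumptions chosen for illustration.

```python
def logistic_trajectory(x0: float, steps: int, r: float = 4.0) -> list:
    """Iterate x -> r * x * (1 - x); each step is fully determined by the last."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


a = logistic_trajectory(0.2, 60)
b = logistic_trajectory(0.2, 60)          # identical start
c = logistic_trajectory(0.2 + 1e-10, 60)  # almost identical start

# Micro-causality: the same state at t0 always gives the same trajectory.
same_rule_same_result = (a == b)

# Future states are restricted: every value stays within [0, 1].
bounded = all(0.0 <= x <= 1.0 for x in a + c)

# Short-term prediction is good; long-term prediction fails.
early_gap = abs(a[5] - c[5])
max_gap = max(abs(p - q) for p, q in zip(a, c))
```

Here `early_gap` remains tiny while `max_gap` grows to a sizeable fraction of the whole range, so a measurement error at t0 that is far too small to detect eventually makes the state at t0+n unpredictable, even though the rule itself never changes.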

In my approach, which I’ve presented at seminars but not published since I retired as a full time

academic in 2006 (I’ve been meaning to write a book), the future is not a fog, but will contain

islands of stability, possible futures, which will be described at higher levels of abstraction the

more distant in the future is each island. The intention is to model these islands of the future

using scenarios, since that is how people, including designers, think according to my ATIS

hypothesis. The basic idea is that if a good general systems model at t0 can be developed, then

better future scenarios can be developed than if a relatively poor model of the world at t0 is used

by designers. I am far from understanding all the ramifications of this futures modelling

approach, but I hope to interest John in it one day, because it might provide a means to address

how his design solutions could be generated from his design problems, in contrast to my several

arguments above that requirements cannot generate designs.

Comment 50: See Comments 26, 30, 33, 34 and 38.

My seventh criterion arose from the work I’ve been doing in Computer Supported Cooperative

Work (CSCW) since the late 1980s, and which has been my main application area, providing testbeds

for many aspects of my research. It is a myth that even the older task analysis methods,

such as HTA (Annett et al., 1967; 1971; Annett, 2003; 2004), and TAKD, cannot adequately

model collaborative work. Most task analysis techniques, however, are about how people

perform tasks, usually people doing so using tools. I think there are historical reasons for this,

that task analysis was developed by psychologists, and that until recently the only active agents,

the things that perform tasks, have been people. Erik Hollnagel (2003) has suggested that some

intelligent software can act as autonomous agents, although, as is so typical, I’ve argued for a

more extreme position (Diaper, 2004; 2006; Diaper and Sanger, 2006), that many things, not

necessarily involving an intelligent agent of any sort at all, can act as agents and should so be

modelled. This concept of analysing non-human agents is critical for my extension of the

Conception’s modelling of work systems and application domains.

Comment 51: I assume Diaper is here referring to the Design Problem Conception (Dowell and Long, 1989). To avoid any misunderstanding, any so-called extension should be set out with respect to the whole Conception. Otherwise, it is unclear which parts of the Conception are espoused; which parts are not espoused; and which parts are added by way of extension.

A work system is the agent that performs work by transforming the application domain, and the

consensus, which I question (e.g. Diaper and Stanton, 2004), but won’t here, is that agents are

purposive, that is, they have goals and they attempt to transform the application domain to

achieve these goals.

Comment 52: It is worth setting out a full citation of the Dowell and Long (1989) Conception on this point:

‘2.3.1 Interactive Worksystems: Humans are able to conceptualise goals and their corresponding behaviours are said to be intentional (or purposeful). Computers, and machines more generally, are designed to achieve goals, and their corresponding behaviours are said to be intended (or purposive). An interactive worksystem (‘worksystem’) is a behavioural system distinguished by a boundary enclosing all human and computer behaviours whose purpose is to achieve and satisfy a common goal. For example, the behaviours of a secretary and wordprocessor whose purpose is to produce letters constitute a worksystem. Critically, it is only by identifying that common goal that the boundary of the worksystem can be established: entities, and more so – humans, may exhibit a range of contiguous behaviours, and only by specifying the goals of concern, might the boundary of the worksystem enclosing all relevant behaviours be correctly identified.’

The reader is at liberty to relate Diaper’s claimed extension to that part of the Conception which is assumed to have been extended, and to judge for themselves whether the extension is well-formed.

The work systems in the TOM project, or those in Steve Cummaford’s

dissertation or in Dowell (1998), consist of a number of things, at least one human, but also

computers and other objects. As the agent, however, it is the work system that possesses the goals

pertaining to desired application domain change and not any one or more of its constituent

components. This is part of the Dowell and Long (1989) Conception, and the part which has

most inspired me.

Comment 53: See Comment 52.

When doing a general systems analysis, i.e. my much improved alternative to task analysis, which

I originally called SST4SM and which, with extensions, I have now renamed the SAM (Systemic

Analysis Method; Diaper, 2005), what has to be established, for some particular duration, is the

boundary that separates that part of the system that is functioning as a work system agent, from

the rest of the system, the application domain, which is changed. This is different from most task

analytical approaches where it is people who are agents, and does produce a different sort of

model from the task dialogue one discussed in the previous section. As with John Dowell’s

(1998) blackboard model mentioned above, in the SAM a work system, say consisting of a user

and a computer attempting an e-commerce transaction, would together possess the goals of

changing the application domain by successfully purchasing goods.

Comment 54: The latter claim is consistent with the citation in Comment 52: ‘An interactive worksystem (‘worksystem’) is a behavioural system distinguished by a boundary enclosing all human and computer behaviours whose purpose is to achieve and satisfy a common goal.’

Overlapping in time,

however, at a lower level of analysis, shifting the work system boundary so that it is coextensive

with only the user, then in the SAM the user’s computer becomes the application domain and the

user has goals about changing it, so that at the higher level, together, they can change the goods-purchasing

domain.

Comment 55: This is a very interesting idea. For its consistency or otherwise with the Dowell and Long Design Conception, see the citation in Comment 52.
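
The shifting of the work system boundary in the e-commerce example can be sketched with sets. This is an illustrative reading only, not SST or the SAM notation: the function name `partition` and the component names are assumptions introduced here. It follows the view stated earlier that everything in the system model outside the work system boundary is the application domain.

```python
def partition(system: frozenset, work_system: frozenset) -> tuple:
    """Split a system model into (work system, application domain)."""
    if not work_system <= system:
        raise ValueError("work system must lie within the system model")
    # Everything outside the chosen boundary is the application domain.
    return work_system, system - work_system


# Hypothetical components of an e-commerce system model.
system = frozenset({"user", "computer", "goods", "payment"})

# Higher level: user and computer together form the work system,
# whose shared goal is to change the goods-purchasing domain.
ws_high, domain_high = partition(system, frozenset({"user", "computer"}))

# Lower level, overlapping in time: the boundary is coextensive with the
# user alone, so the computer now falls within the application domain.
ws_low, domain_low = partition(system, frozenset({"user"}))
```

The same component, the computer, is part of the agent at one level and part of what is changed at the other, so the two descriptions can hold at once without contradiction.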

In these general systems models there can be more than one work system

active simultaneously, although, as always, this is tricky to model, and these may persist for

different durations, but more importantly, for any moment (ti) the system can be described at

different levels by the analyst selecting different possible work system boundaries, each boundary